
Zheng Wang

I am a first-year PhD student in Computer Science at the University of Illinois Urbana-Champaign, and my advisor is Prof. Minjia Zhang. My current research interests focus on the following two directions:

Prior to joining UIUC, I was very fortunate to be advised by Prof. Yingyan (Celine) Lin of the EIC Lab as a Research Assistant in the School of Computer Science at Georgia Tech.

Outside of my academic life, I like to stay healthy by working out regularly. I'm also really into playing 🎾 tennis 🎾; it's a fun, challenging sport that keeps me both physically and mentally sharp.

Email / Google Scholar


Research


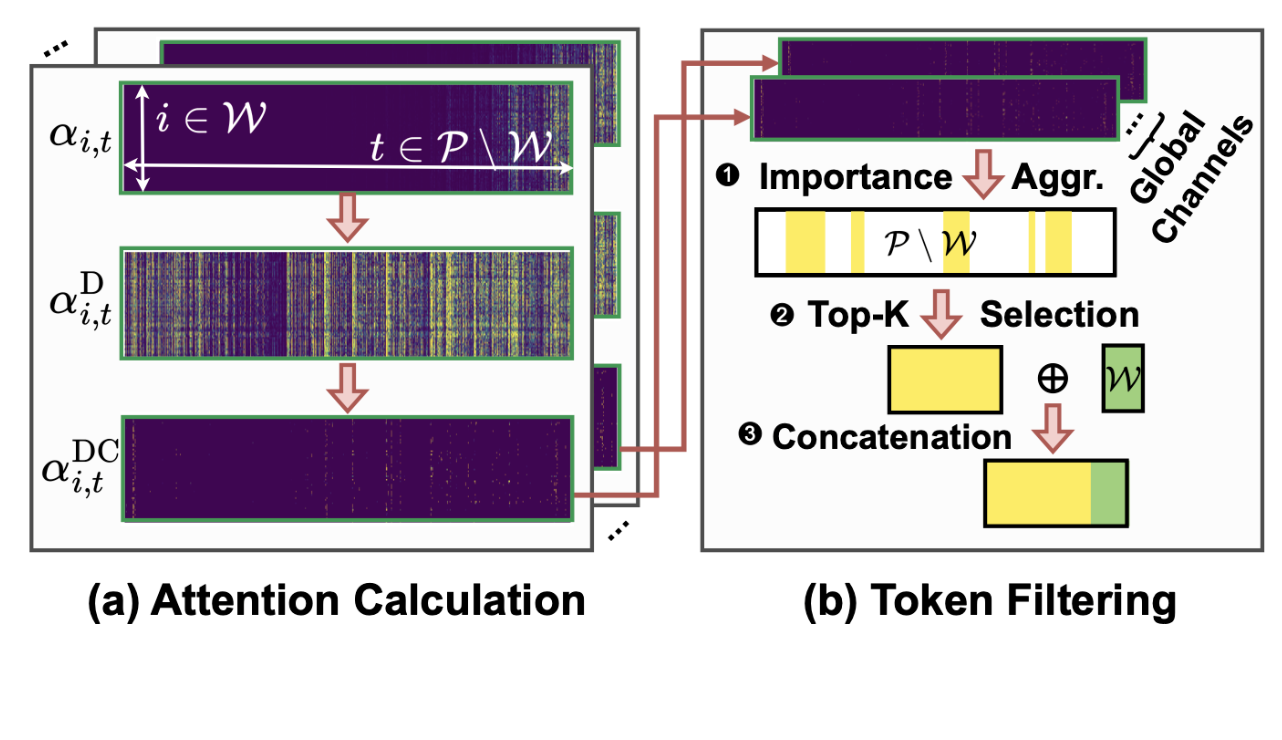

LAMB: A Training-Free Method to Enhance the Long-Context Understanding of SSMs via Attention-Guided Token Filtering

Zhifan Ye, Zheng Wang, Kejing Xia, Jihoon Hong, Leshu Li, Lexington A. Whalen, Cheng Wan, Haoran You, Celine Lin, Souvik Kundu

63rd Annual Meeting of the Association for Computational Linguistics, ACL 2025


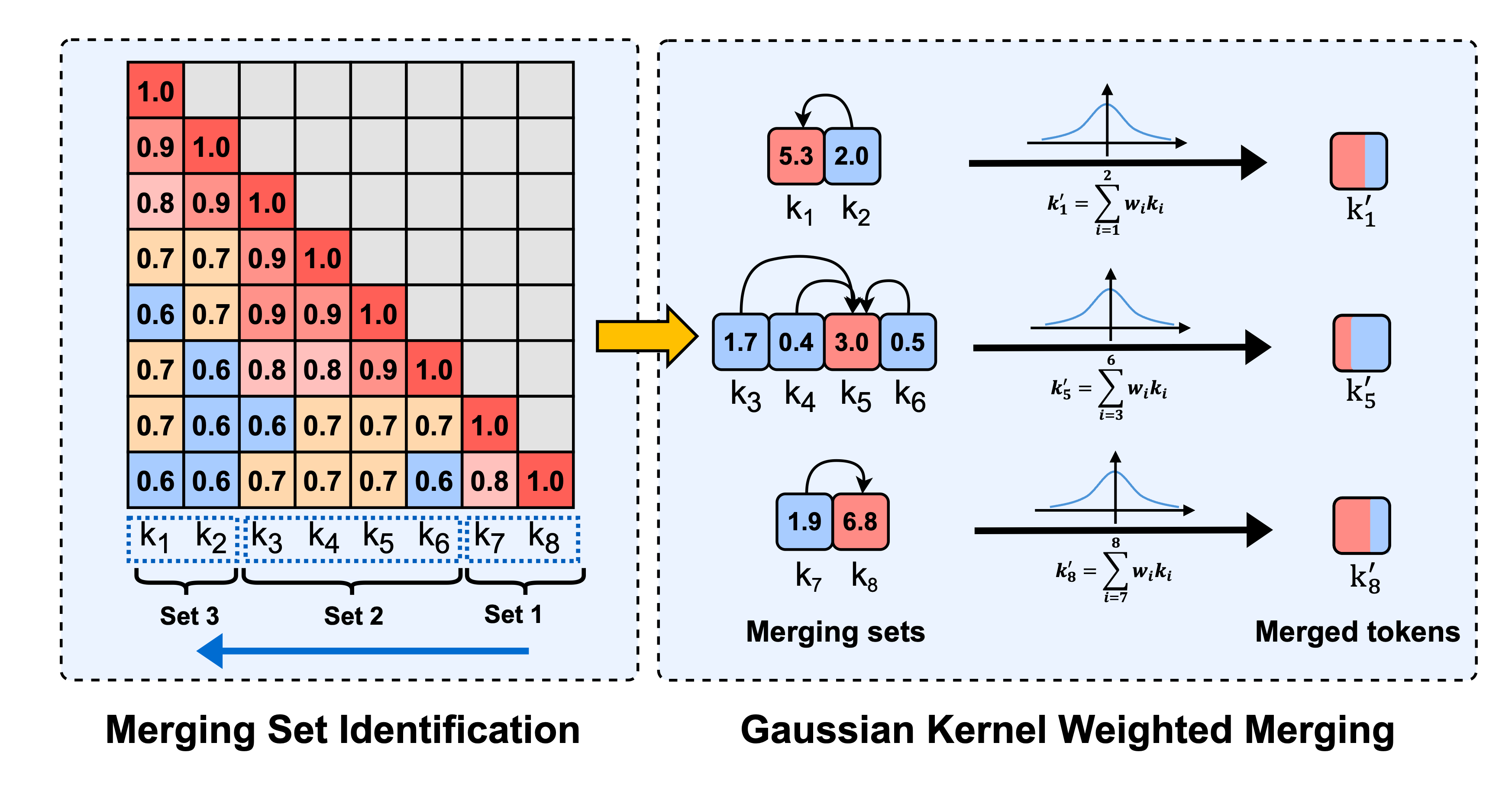

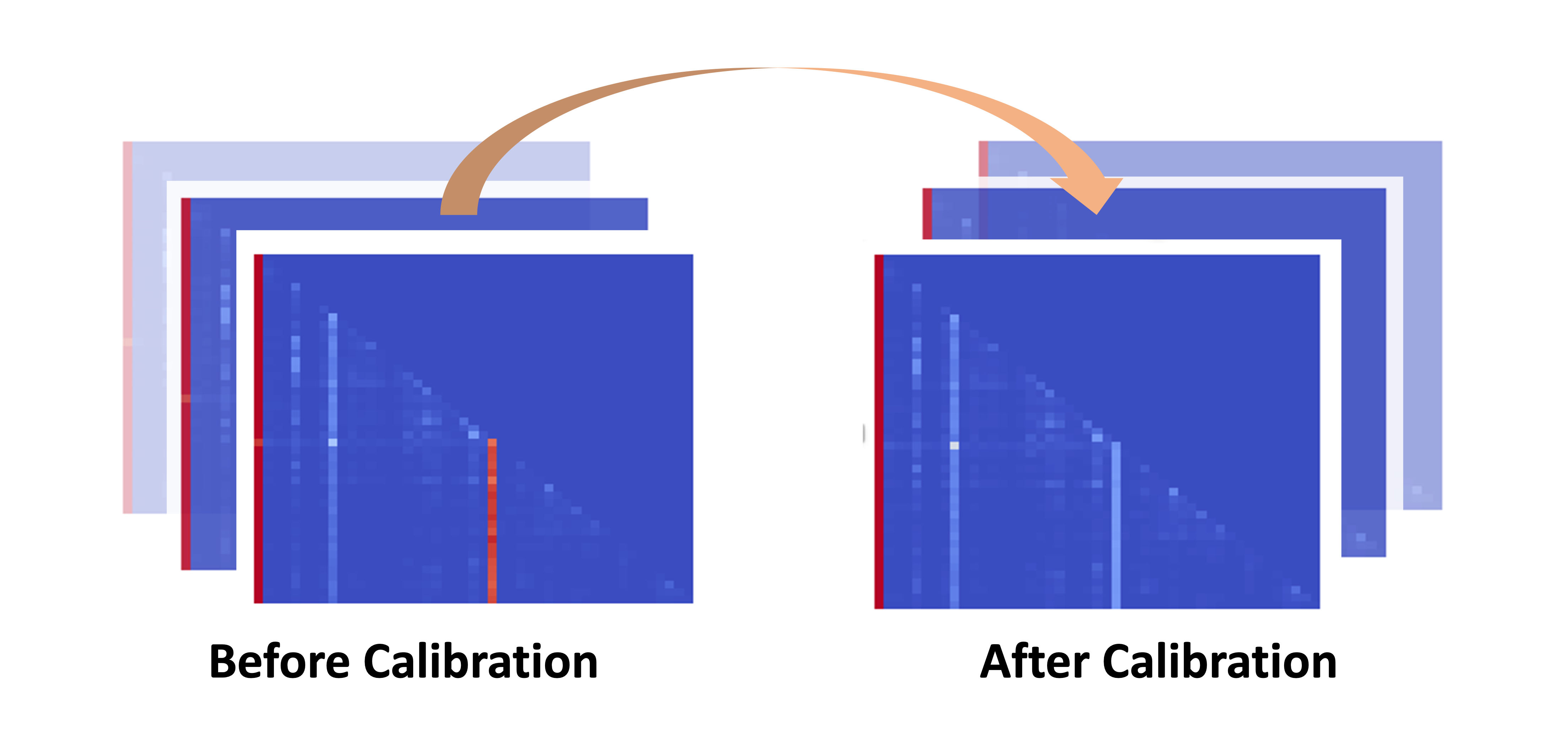

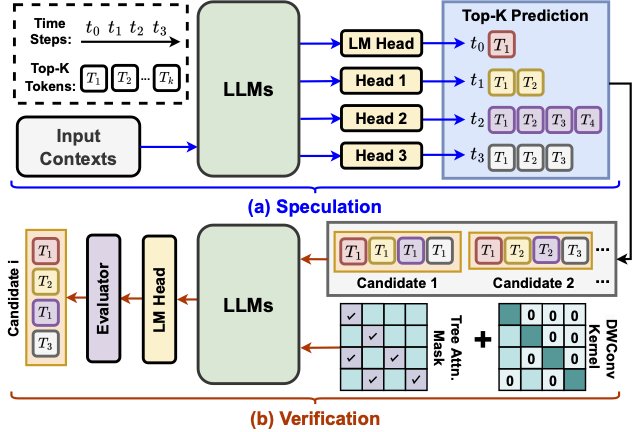

Model Tells You Where to Merge: Adaptive KV Cache Merging for LLMs on Long-Context Tasks

Zheng Wang, Boxiao Jin, Zhongzhi Yu, Minjia Zhang

Preprint


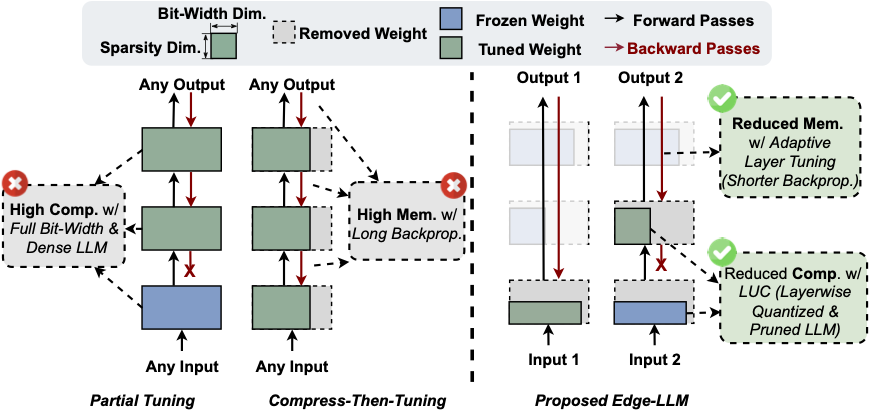

EDGE-LLM: Enabling Efficient Large Language Model Adaptation on Edge Devices via Layerwise Unified Compression and Adaptive Layer Tuning & Voting

Zhongzhi Yu, Zheng Wang, Yuhan Li, Haoran You, Ruijie Gao, Xiaoya Zhou, Sreenidhi Reedy Bommu, Yang Katie Zhao, Yingyan Celine Lin

61st ACM/IEEE Design Automation Conference, DAC 2024


Teaching


Services


Selected Awards


Design and source code from jonbarron.